Shared input and recurrency in neural networks for metabolically efficient information transmission

Abstract:

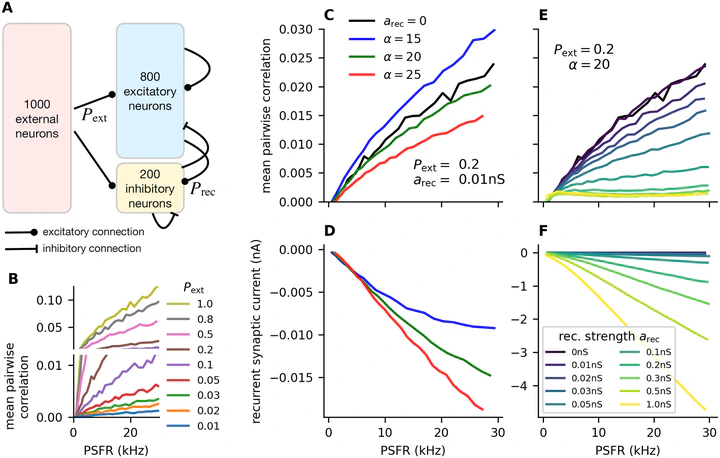

Information processing in neurons is mediated by electrical activity carried by ionic currents. To maintain homeostasis, neurons must actively reverse these ionic currents, a process that consumes energy in the form of ATP. Typically, the more energy a neuron can use, the more information it can transmit. It is generally assumed that, under evolutionary pressure, neurons evolved to process and transmit information efficiently: at high rates but at low metabolic cost. Many studies have addressed this balance between transmitted information and metabolic cost for single neurons. However, information is often carried by the activity of a population of neurons rather than by single neurons, and few studies have investigated this balance in recurrent neural networks such as those found in the cortex. In such networks, external input from thalamocortical synapses introduces pairwise correlations between neurons, which complicates information transmission. These correlations can be reduced by inhibitory feedback through recurrent connections between inhibitory and excitatory neurons in the network. However, this feedback activity increases the metabolic cost of the network. By analyzing the balance between decorrelation through inhibitory feedback and correlation through shared thalamic input, we find that both shared input and inhibitory feedback can help increase the information-metabolic efficiency of the system.